Association Rule Mining

Association rule mining (ARM) is an unsupervised learning technique. It discovers relationships between items in a transaction dataset and can be applied to many real-world scenarios. The best-known example is shopping analytics (market basket analysis), which digs into consumers’ transaction records to find products that tend to be bought together. Retailers can then recommend relevant products to customers, which can help increase sales. This makes ARM a useful data analysis technique.

The Rules

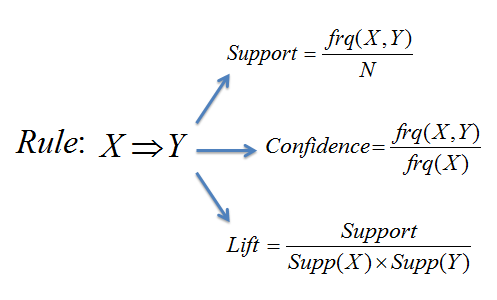

An association rule represents a pattern found in the dataset. It is written $X \to Y$ and can be read as an if-then statement: if $X$ occurs, then $Y$ is likely to occur as well. Here $X$ is called the antecedent (left-hand side) and $Y$ is called the consequent (right-hand side). Three metrics are commonly used in ARM: support, confidence, and lift. They quantify the strength of an association rule and so reflect how useful the rule is.

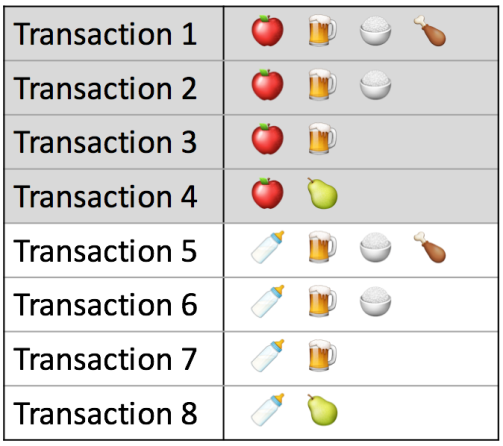

The example transaction table is used in the following sections to introduce support, confidence, and lift.

Support

Consider the rule $Rice \to Chicken$ based on the figure above. $Support(Rice, Chicken)$ measures how often Rice and Chicken occur together relative to all transactions. In other words, it is the number of transactions that contain both items, $frq(Rice, Chicken)$, divided by the total number of transactions $N$.

$$Support = \frac{frq(Rice, Chicken)}{N} = \frac{2}{8} = 0.25$$

Confidence

Using the same rule $Rice \to Chicken$, $Confidence(Rice, Chicken)$ measures how often Rice and Chicken occur together relative to the transactions that contain Rice. In other words, it estimates the probability that a customer who buys Rice also buys Chicken.

$$Confidence = \frac{frq(Rice, Chicken)}{frq(Rice)} = \frac{2}{4} = 0.5$$

Lift

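Still using the same rule $Rice \to Chicken$, $Lift(Rice, Chicken)$ measures how much the presence of Rice raises the probability that Chicken appears, compared with how often Chicken appears overall. A lift greater than 1 indicates that the two items occur together more often than expected if they were independent, a lift of 1 indicates independence, and a lift below 1 indicates that they occur together less often than expected.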

$$Lift = \frac{Support(Rice, Chicken)}{Support(Rice) \times Support(Chicken)} = \frac{0.25}{0.5 \times 0.25} = 2$$
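The three metrics can be checked with a few lines of Python. The sketch below is only illustrative: the transaction table itself lives in the figure, so the `transactions` list here is a hypothetical stand-in constructed to match the counts used in the formulas (8 transactions, 4 containing Rice, 2 containing Chicken, 2 containing both). The function names are likewise assumptions made for this example.

```python
# Hypothetical transactions chosen to match the counts in the formulas:
# N = 8, frq(Rice) = 4, frq(Chicken) = 2, frq(Rice, Chicken) = 2.
transactions = [
    {"Rice", "Chicken"},
    {"Rice", "Chicken", "Milk"},
    {"Rice", "Milk"},
    {"Rice"},
    {"Milk", "Bread"},
    {"Bread"},
    {"Milk"},
    {"Bread", "Eggs"},
]


def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    hits = sum(1 for t in transactions if items <= t)
    return hits / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Support of the whole rule divided by support of the antecedent."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)


def lift(transactions, antecedent, consequent):
    """Confidence of the rule divided by support of the consequent."""
    return confidence(transactions, antecedent, consequent) / support(
        transactions, consequent
    )


print(support(transactions, {"Rice", "Chicken"}))       # 0.25
print(confidence(transactions, {"Rice"}, {"Chicken"}))  # 0.5
print(lift(transactions, {"Rice"}, {"Chicken"}))        # 2.0
```

Writing lift as confidence divided by the consequent's support is algebraically the same as the formula above, since $Confidence = Support(Rice, Chicken) / Support(Rice)$.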

Apriori Algorithm

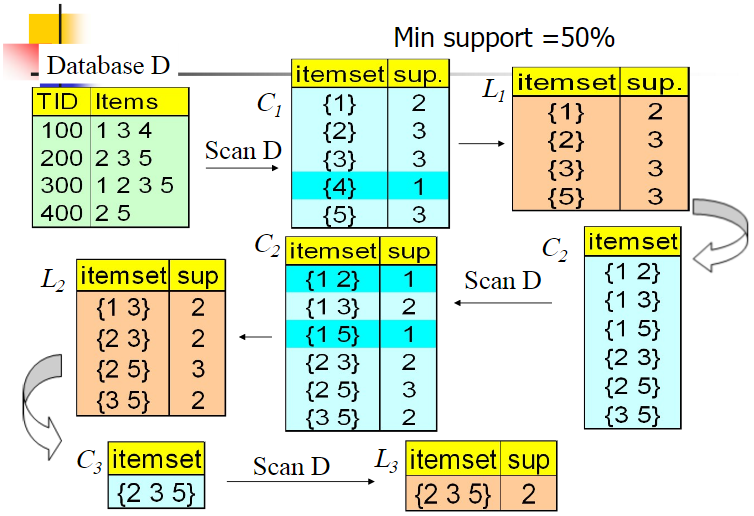

The Apriori algorithm is one of the most common algorithms used in association rule mining to find frequent itemsets, which help data analysts make decisions. Itemsets can be evaluated using support, confidence, or lift. The goal of Apriori is to find the largest frequent $k$-itemsets. The example in this section uses support as the criterion for evaluating frequent itemsets. Apriori works iteratively: it generates candidate itemsets, computes the support of each candidate, and drops the candidates whose support falls below the minimum threshold to obtain the frequent itemsets. It then builds larger candidates from the surviving itemsets and repeats until no new frequent itemsets are found.
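The iteration described above can be sketched in plain Python. This is a minimal, unoptimized illustration rather than a reference implementation: the `apriori` function name, the `min_support` parameter, and the toy `transactions` list are all assumptions introduced for this example, and only support is used as the criterion.

```python
from itertools import combinations

# Hypothetical toy transactions, used only for illustration.
transactions = [
    {"Rice", "Chicken"},
    {"Rice", "Chicken", "Milk"},
    {"Rice", "Milk"},
    {"Rice"},
    {"Milk", "Bread"},
    {"Bread"},
    {"Milk"},
    {"Bread", "Eggs"},
]


def apriori(transactions, min_support):
    """Return a dict mapping each frequent itemset (frozenset) to its support."""
    n = len(transactions)
    # Level 1 candidates: every individual item.
    current = [frozenset([i]) for i in sorted({i for t in transactions for i in t})]
    frequent = {}
    k = 1
    while current:
        # Count support and keep only candidates that reach the threshold.
        level = {}
        for cand in current:
            s = sum(1 for t in transactions if cand <= t) / n
            if s >= min_support:
                level[cand] = s
        frequent.update(level)
        # Join step: merge frequent k-itemsets into (k+1)-item candidates.
        k += 1
        keys = list(level)
        candidates = {a | b for a in keys for b in keys if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent.
        current = [
            c for c in candidates
            if all(frozenset(sub) in level for sub in combinations(c, k - 1))
        ]
    return frequent


result = apriori(transactions, min_support=0.25)
for itemset, s in sorted(result.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), s)
```

The prune step relies on the property that gives Apriori its name: any subset of a frequent itemset must also be frequent, so a candidate with an infrequent subset can be discarded without counting it.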

Plan For The Project

The project focuses on exploring the impact of a goalkeeper’s save ability on a club’s ranking. The selected features are goals conceded, save ratio, minutes played, performance rating, and club ranking, and ARM is used to study the association between the goalkeeper’s save ability and the team’s ranking. Setting the minimum support, minimum confidence, and other parameters helps ensure that the discovered association rules are reliable. Finally, the frequent itemsets are used to analyze the relationship between goalkeepers’ save ability and clubs’ rankings.
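Because ARM operates on sets of categorical items, numeric goalkeeper features would first need to be binned into labels. The sketch below shows one possible way to do that. Everything in it is hypothetical: the field names, the thresholds (0.70 save ratio, 40 goals conceded, rank 10), and the two sample records are placeholders for illustration, not values taken from the project data.

```python
def to_items(record):
    """Convert one goalkeeper-season record into a set of categorical items.

    All thresholds are illustrative assumptions and would need to be
    chosen from the real data (for example, from medians or quartiles).
    """
    return {
        "save_ratio=high" if record["save_ratio"] >= 0.70 else "save_ratio=low",
        "conceded=few" if record["goals_conceded"] <= 40 else "conceded=many",
        "rank=top_half" if record["club_rank"] <= 10 else "rank=bottom_half",
    }


# Hypothetical records, not real player statistics.
records = [
    {"save_ratio": 0.78, "goals_conceded": 28, "club_rank": 2},
    {"save_ratio": 0.61, "goals_conceded": 55, "club_rank": 17},
]

# Each record becomes one "transaction" that an ARM algorithm can consume.
transactions = [to_items(r) for r in records]
print(transactions)
```

A rule such as `save_ratio=high` $\to$ `rank=top_half` could then be evaluated with support, confidence, and lift in the same way as the Rice and Chicken example.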

Resource

Image 1: https://images.app.goo.gl/88PuEgtbmvfMNzXW7